Author and human rights advocate Alexa Koenig explains how the Berkeley Protocol will empower the next generation of human rights defenders.

Digital Impact 4Q4: Alexa Koenig on Expanding Digital Open Source Investigations

00:00 CHRIS DELATORRE: This is Digital Impact 4Q4. I’m Chris Delatorre. Today’s four questions are for Alexa Koenig, Executive Director of the Human Rights Center at UC Berkeley. In 2018, with help from a Digital Impact Grant, the Center established guidelines used to inform the Berkeley Protocol, an ambitious undertaking to standardize the use of digital open source information in criminal and human rights investigations.

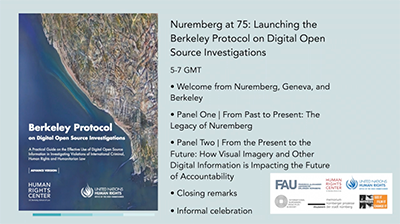

The Protocol explores how digital information is gathered across a myriad of platforms in order to address human rights violations and other serious breaches of international law, including international crimes. This month, the Center released the Protocol in partnership with the United Nations Human Rights Office on the 75th anniversary of the Nuremberg Trials.

00:58 CHRIS DELATORRE: Alexa, establishing legal and ethical norms is critical to this process. How is digital information useful for justice and accountability purposes and how much of this are we seeing in the courts right now?

01:12 ALEXA KOENIG: First of all, Chris, thanks so much for having me. I’m really excited to have a chance to speak with you about what we’ve been doing at the Human Rights Center at UC Berkeley with support from the Digital Impact Grant. As for how digital information can be useful for justice and accountability, I think the reason it’s becoming increasingly important for that purpose, and for documenting and getting justice for human rights violations, mass atrocities, and war crimes, really boils down to one fundamental thing: more and more of our communication is happening across digital platforms and in online spaces. So a lot of the communication that helps us build war crimes cases and human rights cases is now actually happening in digital space.

The gold standard for getting someone convicted of an international crime is to gather three kinds of evidence. The first is testimonial information, which is what people have to say about what’s happened to them or to their communities. The second is physical information, which would be, of course, the murder weapon, or soil samples if there are allegations of a chemical weapons attack. And the third is documentary evidence. Historically, this would have been contracts that were issued, or written commands given by a high-level person to the people on the ground in a conflict zone. But more and more, what we’re seeing are posts to sites like Twitter or Facebook, or their counterparts in other parts of the world. Ideally, as a war crimes investigator or human rights investigator, you find information from all of those sources, and hopefully they point in the same direction with regard to the who, the what, the when, the where, the why, and the how of what’s happened. As international criminal investigators, we need all of those things. We need to be able to explain not only how something happened and who’s responsible, but also how we know that the facts we are claiming are in fact true.

With regard to how much we’re seeing in the courts right now, it really depends on which courts you’re talking about. Domestically, we’ve certainly seen a rise in the introduction of social media content over the past 15 years. But with international courts, like the International Criminal Court, we’ve really seen a growing awareness since about 2014 or 2015. And there have been two milestone cases that have really demonstrated how powerful social media content can be for court processes. The first was what’s referred to as the Al-Mahdi case, which was brought in the International Criminal Court to try to get justice for the destruction of cultural heritage property in Mali. In that particular situation, the prosecution used satellite imagery and other publicly available digital information to try to establish where these crimes happened and who might have been responsible.

A second milestone came in 2017, and again in 2018, when arrest warrants were issued for a man named Al-Werfalli from Libya, who was accused of the extrajudicial killing of 33 people in that country. Because social media content became the basis for those warrants, I think many of us in the international justice community are holding our breath to see how the court ultimately deals with the information in these two cases. In the Al-Werfalli situation, the basis for the arrest warrants was seven videos that were posted to social media. So it’ll be the first time we really get a chance to see how powerful those kinds of videos, and that kind of social media content, can be for establishing an evidentiary record.

Finally, I think we’ve also really seen it in the International Criminal Court’s last two strategic plans, which have established that the Court needs to build more internal capacity to do these sorts of investigations. A war crimes case might take five, 15, even 30 years before the atrocity lands in a court of law. And I think as more and more cases move through the system, and we’ve seen more and more international cases being brought for atrocities that happened in the era of social media, we’re going to see that this kind of content has increased in value for justice and accountability purposes.

05:10 CHRIS DELATORRE: Developing a protocol like this must require collaboration across cultures and professions, no small task in the current social and political climate. According to the Protocol, one of the biggest challenges is the overwhelming amount of information online, some of which may be compromised or misattributed. Your question of how we know that what we’re claiming is true really resonates, especially now. My mind goes straight to social media: disinformation, computational propaganda, cyber harassment. How do you preserve the chain of custody for this kind of information? In other words, how do you find the information you need on social media, and then how do you verify that the content is real? Are you currently working with private tech companies, for instance, to ensure efficacy across existing platforms?

06:04 ALEXA KOENIG: You raise such an important point: the information ecosystem in which we’re working, as we try to find evidence of human rights violations and war crimes, is really replete with misinformation and disinformation today. And so I think there’s a very healthy skepticism on the part of the legal community, and particularly of judges: how do we know that a video that claims to be from Syria, say in 2018, is in fact from Syria, is in fact from 2018, and can help prove the facts it claims to establish? In terms of how we find that content, it’s obviously a really tricky environment in which to work. We now have over 6,000 tweets going out every second and more than 500 hours of video being posted to YouTube every minute. A colleague of mine has explained that trying to find relevant information is a little bit like trying to find a needle in a haystack made of needles.

So that takes a tremendous amount of creativity, and, as you said, collaboration, and really cooperation across the international justice community: to know what to look for and which platforms might be hosting that information, to have the language skills to find it in the native languages of the places where these atrocities have taken place, and to know the rich array of tools that have been developed to help us with advanced searching across multiple social media sites.

In terms of how we verify it, in this kind of information ecosystem it’s so critical for us to understand the facts that we’re coming across. Of course, for human rights advocates, facts are our currency, and that currency is being devalued right now as more and more people cast doubt on the kinds of information we’re finding online. Our reputations are critical to the legitimacy of the work we’re doing. So we follow a really detailed three-step process for verifying that content, which is spelled out in the Protocol. The first step is technical. If we find a video or a photograph, we look to see whether there is metadata: information about that item, such as when it was created and how it was captured, that can help us confirm or disprove what we’ve been told about that video or photograph. Unfortunately, when a video or photograph is put up on social media, most social media companies will strip the metadata: the pieces that say what camera it was shot on, what the date was, and what the geo-coordinates were of where the person was standing when they captured it. So a big part of the process we then engage in is really building that information back in. The second step, and the first way we do that, is to look at the content of the video or photograph and see whether what we’re seeing is consistent with what we’ve been told it is.

There’s a very, I would say, infamous video out there called “Syrian Hero Boy” that claims to show a young boy in Syria rescuing a young girl while they’re being fired at during a particular conflict. Many media outlets were fooled into believing that the video was authentic, and circulated it, saying that it was a really great example of people doing heroic things in times of crisis. However, it turned out that it was actually shot on a film set in Malta. The director had what many of us would consider a very positive intent in shooting that film: he says it was to bring attention to the atrocities in Syria, because the international community at the time wasn’t paying as much attention as was warranted. Still, I think it became deeply problematic, and it made people much more aware that they have to be careful in the verification process. The reporters who worked on that story and shared it noticed signs in the visual imagery suggesting that this was, in fact, Syria. However, the reporters who ultimately didn’t share that video were the ones who went on to the third step of the process, which is to really analyze the source of the video. This can be the key differentiator. You always want to trace the origins of a particular item back to the original person who shot it, and assess how reliable they are for that particular piece of content.

In terms of working with private tech companies, we’ve been talking with them at the Human Rights Center at Berkeley about a lot of these issues since about 2013, trying to help them understand how valuable much of the information they host can be for justice and accountability. A lot of the information we’re interested in is the very information they’re most likely to take down because it violates their terms of service or their user guidelines. It can often be very graphic or very contested material. And sometimes they take it down for really good reasons: we don’t want people inadvertently exposed to information that will be emotionally upsetting or distressing, potentially even harmful. They will also take it down for privacy reasons. But that information can be so valuable to establishing not only the legal record but also the historical record of important moments, not only geopolitically, but in terms of human lives and human experiences. So a big part of what we’ve increasingly been working on is figuring out how that information can avoid being destroyed and instead be preserved for later accountability efforts.

11:13 CHRIS DELATORRE: You touched on the importance of language skills, and this fascinates me. In essence, we seem to be talking about learning a new language altogether. You’re also working with technologists to improve the quality of information found on social media, by better identifying misinformation, for instance. You’ve mentioned an 89% success rate. This reminds me of a conversation we had earlier this year with Niki Kilbertus, a researcher at the Max Planck Institute for Intelligent Systems. He says that creating a culture of inclusivity is just as important as getting the numbers and the predictions right. You’ve made clear that this protocol isn’t a toolkit per se but a set of living guidelines, which seems to leave some room for interpretation. First, how are you matching the speed and scale of online information to preserve this success rate? And second, how do you get a fact-based conversation going that’s not only accurate but also one that people can care about and really get behind?

12:19 ALEXA KOENIG: I love that interview with Niki, and this idea of creating a culture of inclusivity. I think that’s ultimately been one of the most exciting and rewarding things about working in the online open source investigations community. Many of the methods that we use for legal purposes were really pioneered by journalists. And I think you’re absolutely right that when we’re talking about lawyers and journalists working together, we often speak different languages, and have to come together and figure out how we collaborate more efficiently. When we’re talking about bringing computer scientists and ethicists and others into the conversation, it becomes even more incumbent on all of us to figure out how we translate our areas of practice, so that ideally, we’re all working together to create something that’s even stronger than what we could produce on our own. I think it’s at the intersection of those disciplines where true innovation can happen. And I think that the more we learn to communicate across those disciplines, the more we’re actually able to have some kind of human rights impact.

As you said, we’ve had to focus the protocol on principles rather than tools, in part for that reason. A lot of the process was really trying to figure out which principles, regardless of your area of practice or profession, are common to sourcing facts from social media and other online places. The idea was also that tools change so quickly that if we were to focus on certain tools or platforms, the protocol would date fast: Facebook may be a primary source of information today, but we’re already seeing that begin to shift as people move their communication to places like Parler, et cetera.

So, in order not to have the protocol be outdated by the time it was produced, we figured we would at least start setting the foundation of what people need to know to communicate more effectively. A lot of that meant we had to focus first on even defining [inaudible] terminology: what is an online open source investigation? How does that differ from online open source information generally? As lawyers, we love definitions. They help us understand what we’re talking about, but they also serve as a communication tool. As for the speed and scale I mentioned earlier, with that many tweets going out, that many hours of video going to YouTube, and that many posts to Facebook, it’s not a human scale: we can’t go through that much information using only our more traditional methods.

The Berkeley Protocol was launched in 2020 on the 75th anniversary of the Nuremberg Trials.

So increasingly, what the justice and accountability community has had to think about is how we can bring automation into the process. Marc Faddoul and other researchers at UC Berkeley’s Information School and at the nonprofit AlgoTransparency have really tried to figure out ways to automate the detection of misinformation. One project we worked on this past fall with the Human Rights Center’s Investigations Lab, a consortium of students who do this kind of online fact-finding and then verify that information, has been to help clean up that information ecosystem by supporting those researchers’ work to develop an algorithm that detects misinformation online, and to make it as accurate as possible.

A big piece of how we get people to care, I think, really comes down to figuring out how we help the general public merge the ideas in their heads with the feelings in their hearts. Whenever we try to convince people about what has happened on the ground at a site of atrocity, it’s really about not only bringing in trustworthy data, but getting people to care about that data. A lot of research has shown that people care about people, not numbers, and they particularly care about people with whom they can identify and who remind them of themselves or those they love. So the storytelling part of this, taking these disparate facts and videos and photographs and bringing them back together like pieces of a puzzle to show a bigger picture, is a big part of what we’re trying to do: really merging that quantitative work with qualitative storytelling.

16:20 CHRIS DELATORRE: Now let’s talk about your process. It has a lot in common with previous initiatives to develop standards for investigating torture, summary executions, and sexual violence in conflict. As you’ve pointed out, these efforts were essential steps to help lawyers and judges understand how to evaluate new investigative techniques, and to guide first responders and civil society groups on how to collect information lawfully. How do you plan on getting the Berkeley Protocol into the hands of everyone using content to build human rights cases: technologists, data practitioners, activists, judicial officials? And lastly, how can our listeners get involved today?

17:06 ALEXA KOENIG: Thanks for asking all of that. I think getting this out really depends on figuring out how we reach as broad a public as possible. And by public, I mean those audiences who can hopefully learn something from the protocol, and adopt and adapt pieces of it for the kinds of work they’re trying to do in the human rights space. One of the biggest strategic decisions we made early on was to approach the United Nations Office of the High Commissioner for Human Rights to see if they would be interested in partnering with us in this bigger effort. Their fact-finding teams had increasingly been seeing the need for deeper engagement with social media and other online information, and they have been an extraordinary asset and an incredible partner in building this from the ground up. It has also depended on broad consultation: we’ve done over 150 interviews with different kinds of experts in the space, whether human rights investigators, war crimes investigators, journalists, technologists, et cetera, to better understand how the protocol could be designed to be as effective and efficient as possible, and also to begin answering the questions that, as a community of practice, we knew none of us could answer on our own. So we also hosted a series of workshops to deal with some of the stickier unknowns, and to begin building the norms and the networks that I think are really necessary for implementing the ethical guidelines and principles that the protocol pulls together.

Another big piece of this has been ensuring its accessibility. We’re really excited that it’s being translated into every official language of the United Nations. We’re hoping that by late spring at the latest, we’ll be able to get it out to a much broader global audience. Of course, many of the communities that have been hardest hit by human rights violations, atrocities, et cetera, don’t necessarily speak English or French, and we wanted to make sure from the outset that this was something people could use in very different parts of the world.

Another piece has been developing networks to support awareness and distribution, and of course creating trainings so people know how to use the protocol and how to integrate it into their workflows. At this point, we’ve trained audiences as diverse as Interpol, parts of the International Criminal Court, various civil society groups, activists all over the world, and war crimes investigators. We’ve also set up a professional training in partnership with the Institute for International Criminal Investigations, which tends to target investigative reporters and particularly war crimes and international criminal investigators, so that they know how some of these methods, which were really pioneered by journalists, can be brought in in a way that helps build the evidentiary foundations of cases.

And of course, I don’t want to leave out the students, who have been extraordinary pioneers in helping us think through how this new frontier of human rights investigations can be run. Whether they’re working with us at Berkeley or at other universities around the world, they’ve been central to thinking through the ethics piece of this. How do these methods adapt to very different contexts in different parts of the world? How do we make sure this isn’t just some kind of elite exercise, but something that really empowers the people who are most impacted to get the information they want to share out to the people they want to communicate it to, and into the hands of people in positions of power who can do something to respond to the atrocities they have been experiencing?

We’re also part of an international network known as Amnesty International’s Digital Verification Corps, a collaboration of seven universities around the world training students to be a next generation of human rights defenders. And of course, we’re also working with student groups at places as diverse as Stanford, and with partners at UC Santa Cruz, UCLA, et cetera.

As far as how people can get involved, I think one way is taking one of our trainings, or those of the other amazing trainers out there, like First Draft News, Bellingcat, et cetera. Mostly, it’s about educating ourselves on how to deal responsibly with social media content and how to do some basic fact-checking, so we know what we’re looking at and make sure we don’t share misinformation. Another would be to sign up for our newsletter; we try to share information about different resources about once a month at humanrights.berkeley.edu. And finally, supporting the work. Like many organizations doing this, we’re a relatively small nonprofit on the Berkeley campus, which is why the Digital Impact Grant from the Digital Civil Society Lab at Stanford was so invaluable to this work. Anyone who wants to follow the work we’re doing or be in conversation about it, we would love to have you follow us on Twitter @HRCBerkeley or @KAlexaKoenig.

21:53 CHRIS DELATORRE: Alexa Koenig, Executive Director of the Human Rights Center at UC Berkeley, thank you.

Digital Impact is a program of the Digital Civil Society Lab at the Stanford Center on Philanthropy and Civil Society. Follow this and other episodes at digitalimpact.io and on Twitter @dgtlimpact with #4Q4Data.