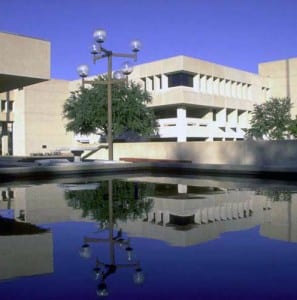

We’re glad to share this conversation with Dr. Murat Kantarcioglu of the University of Texas at Dallas Data Security and Privacy Lab (left). We caught up with him for a brief chat during his sabbatical and continuing research at Harvard University’s Data Privacy Lab in the Institute for Quantitative Social Science (IQSS). Often, in the talk of data privacy, the conversation understandably circles around security, technology or compliance. Dr. Kantarcioglu, however, takes us through a few critical, but less talked-about angles, particularly regarding data sharing.

______

Eric J Henderson, Markets For Good (Eric): Data privacy is now an everyday topic, with certain themes dominating the headlines, particularly those related to (1) Security: protection and control of data as well as associated technology and (2) Policy: regulations, laws, and compliance with them. We’re concerned about those, but what’s missing from the privacy headlines?

Dr. Murat Kantarcioglu, Data Security and Privacy Lab (Murat): Security and compliance remain critical and, further, we must share data in order to solve problems, but we are missing the conversation on incentives. I believe that setting the right incentives for data sharing is as important as addressing security and compliance issues.

…

Eric: You’ve spoken about the lack of mechanisms that bring privacy and data sharing together. What would robust mechanisms look like? Where are we now in the development of them?

Murat: As a part of our research, we are trying to understand how to provide proper incentives in privacy-preserving data sharing mechanisms. Our research actually started with the question of how different organizations can increase their data sharing to solve important problems. We realized that sometimes organizations use privacy as an excuse not to share data when they do not have enough incentives. For these reasons, we advocate for a distributed infrastructure (and have even developed a prototype) where data can be analyzed securely and each participant is compensated based on their contribution.

For example, if hospitals share their data with information exchanges and if such data sharing reduces medical costs due to improved care, they should be compensated based on their data sharing activity. Such a scenario implies that we need easy-to-use, privacy-preserving data sharing systems that measure how much data is shared and the value of such data sharing to generate fair compensation for participants.
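
To make the compensation idea concrete, here is a minimal sketch in Python. It is not the prototype mentioned in the interview; it simply assumes that each participant's contribution can be scored as the number of records shared times a per-record value weight, and that a pool of savings is split in proportion to those scores. The names `Contribution`, `allocate_compensation`, `value_weight`, and `savings_pool`, along with all the numbers, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Contribution:
    participant: str     # hypothetical participant name
    records_shared: int  # how much data the participant shared
    value_weight: float  # assumed per-record usefulness score


def allocate_compensation(contributions, savings_pool):
    """Split savings_pool in proportion to records_shared * value_weight.

    This proportional rule is an assumption made for illustration only.
    """
    scores = {
        c.participant: c.records_shared * c.value_weight for c in contributions
    }
    total = sum(scores.values())
    if total == 0:
        # Nothing of measurable value was shared, so nothing is paid out.
        return {name: 0.0 for name in scores}
    return {name: savings_pool * score / total for name, score in scores.items()}


# Invented example data: three hospitals sharing different amounts of data.
contributions = [
    Contribution("hospital_a", 1000, 1.0),
    Contribution("hospital_b", 500, 2.0),
    Contribution("hospital_c", 2000, 0.5),
]
payouts = allocate_compensation(contributions, savings_pool=90_000.0)
```

In this toy example a hospital that shares fewer but more valuable records can earn as much as one that shares many low-value records, which is the kind of trade-off a fair compensation scheme would need to capture. How to measure the value weight in practice is left open here.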

…

Eric: The logic is clear that data-sharing is indispensable for dealing with large and complex problems. But, what are the current barriers to the flow of high-quality data between entities?

Murat: I think understanding the potential utility and risks of data sharing is critical. In some cases, the general risk and cost profile on top of basic privacy concerns (security and compliance) do not combine to make an attractive scenario, despite the potential to solve problems or the prospect of developing a market.

Luckily, we have many technological mechanisms, ranging from cryptographic tools to data sanitization techniques, to reduce privacy and security risks. On the other hand, understanding the utility of data sharing in advance is harder. Data needs to be shared and analyzed to understand its value. This uncertainty in the utility of sharing data must somehow be reduced to improve the current situation. I believe that as organizations engage in more data analytics projects, the uncertainty in the utility of sharing data will be reduced.

…

Eric: Anonymization is sometimes seen as a solution in and of itself, but you’ve noted a few problems with it. Can you tell us about those?

Murat: Anonymization/sanitization techniques are the backbone of the privacy conversation, up to enabling what some are calling data philanthropy, where private companies give anonymized data to social sector organizations, but they already have difficulties. These techniques are not perfect, and if the anonymization is not done correctly, it may lead to attacks. Therefore, other techniques such as access control (i.e., limiting access to vetted organizations and individuals) may be needed to further reduce the risks.
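
One way to see how imperfect anonymization can expose people is a simple group-size check, sketched below in Python. The interview does not name a specific technique; this sketch borrows the well-known k-anonymity idea, in which a record is considered at risk if fewer than k records share its combination of quasi-identifiers (attributes such as ZIP code or birth year that can be linked to outside data). The function name `risky_groups`, the field names, and the records are all invented for illustration.

```python
from collections import Counter


def risky_groups(records, quasi_identifiers, k):
    """Return quasi-identifier combinations shared by fewer than k records."""
    groups = Counter(
        tuple(record[q] for q in quasi_identifiers) for record in records
    )
    return {key: count for key, count in groups.items() if count < k}


# Invented toy data with names removed but quasi-identifiers left in place.
records = [
    {"zip": "75080", "birth_year": 1980, "diagnosis": "A"},
    {"zip": "75080", "birth_year": 1980, "diagnosis": "B"},
    {"zip": "75080", "birth_year": 1975, "diagnosis": "A"},
]

# Any combination that appears only once could single out one person.
at_risk = risky_groups(records, ["zip", "birth_year"], k=2)
```

Here the third record is the only one with its ZIP code and birth year, so anyone who knows those two facts about a person could learn the diagnosis. A check like this is only a first screen and does not replace access control or other safeguards.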

This question also flows from the conversation on incentives. If organizations are not incentivized or compensated to provide the best data, then perhaps they will not. This seems to be a huge underlying issue as people talk of sharing and spill open new data sets, but there is less talk of data cleaning and data provenance for quality.

The question is how you reconcile privacy with the natural incentive that anonymity creates for lower integrity in data and collaborations.

Eric: Many thanks for your time, Murat.
